Using this class, matrix calculations can be as simple as performing normal arithmetic like this:

```
matrix3 := matrix1 * matrix2
```

Here matrix1 and matrix2 are FW_Matrix objects, and matrix3 is the FW_Matrix object obtained as their matrix product.
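For reference, the standard definition of the matrix product is given below. The symbols $A$, $B$, $m$, $n$ and $p$ are introduced here only for illustration, with $A$ standing for matrix1 and $B$ for matrix2; the product is defined only when the number of columns of $A$ equals the number of rows of $B$.

$$
(AB)_{ij} = \sum_{k=1}^{n} A_{ik} B_{kj},
\qquad A \in \mathbb{R}^{m \times n},\; B \in \mathbb{R}^{n \times p},\; AB \in \mathbb{R}^{m \times p}
$$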

The inverse of a matrix is obtained the same way:

```
matrix_inverse := matrix1 ^ -1
```

A simple way to view a matrix object:

```
? oMatrix
```

While we can use the corresponding methods of the class, in many cases using operators as above makes the code much easier to write and read.

Example:

```
output := ( training_set_inputs * synaptic_weights ):Sigmoid()

synaptic_weights += ( training_set_inputs:Transpose() * ( training_set_outputs - output ) * output * output:Calc( { |x| 1 - x } ) )
```

In the above code, all variables are FW_Matrix objects.
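In mathematical notation, these two lines appear to be one training step of a single sigmoid neuron. The sketch below rests on assumptions that the post does not state: that `Sigmoid()` applies the logistic function to each element, and that the trailing factors `output` and `output:Calc( { |x| 1 - x } )` are meant to combine element-wise (written $\odot$) with the error before the product with the transposed inputs. The symbols $X$, $W$, $Y$ and $O$ stand for training_set_inputs, synaptic_weights, training_set_outputs and output.

$$
\begin{aligned}
O &= \sigma(XW), \qquad \sigma(z) = \frac{1}{1 + e^{-z}} \\
W &\leftarrow W + X^{\top}\bigl[(Y - O) \odot O \odot (1 - O)\bigr]
\end{aligned}
$$

The factor $O \odot (1 - O)$ comes from the derivative of the logistic function, $\sigma'(z) = \sigma(z)\,(1 - \sigma(z))$.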

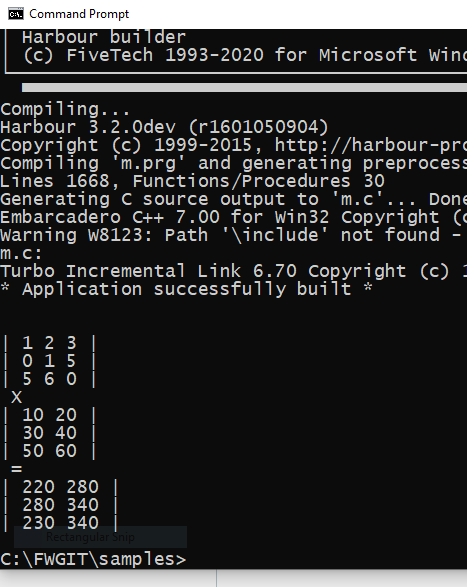

This is a simple sample program:

fwh\samples\matrix01.prg:

```
#include "fivewin.ch"

#xtranslate matrix( <a> ) => FW_Matrix():New( <a> )

//----------------------------------------------------------------------------//

function Main()

   local M1, M2, M3, M4

   M1 := matrix( { { 1, 2, 3 }, { 0, 1, 5 }, { 5, 6, 0 } } )

   ? "Matrix inversion:", M1, "^ -1 =", M2 := M1 ^ -1
   ? "Multiply with Inverse", M1, "X", M2, "=", M3 := M1 * M2
   ? "Is the product", M3, "Identity Matrix?", M3 == 1

   M2 := matrix( { { 10, 20, 30 }, { 40, 50, 60 } } )

   ? "Another matrix multiplication", M2, "X", M1, "=", M3 := M2 * M1
   ? "Check by dividing matrices", M3, "/", M1, "=", M4 := M3 / M1
   ? "Is result correct?", M4, "==", M2, "=", M4 == M2

   ? "Scalar multiplication by 100:", M2, "X", 100, "=", M2 * 100

return nil

//----------------------------------------------------------------------------//
```

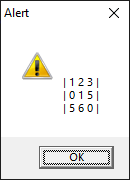

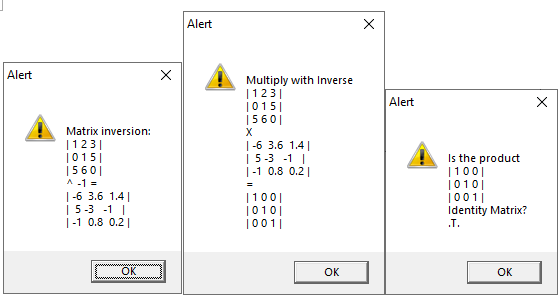

In the above sample, these lines of code:

```
M1 := matrix( { { 1, 2, 3 }, { 0, 1, 5 }, { 5, 6, 0 } } )

? "Matrix inversion:", M1, "^ -1 =", M2 := M1 ^ -1
? "Multiply with Inverse", M1, "X", M2, "=", M3 := M1 * M2
? "Is the product", M3, "Identity Matrix?", M3 == 1
```

produce this result:

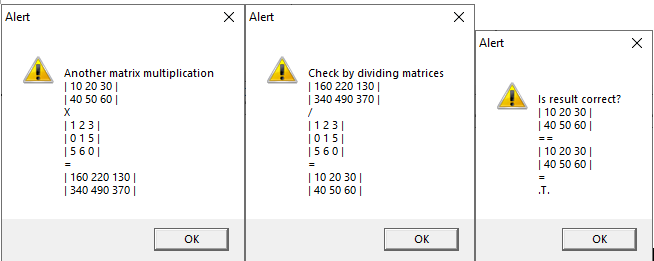
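The inversion can be checked by hand. The working below is ordinary cofactor arithmetic, not output from the class; the values the program displays should agree with it up to floating-point rounding.

$$
\begin{aligned}
\det M_1 &= 1\,(1 \cdot 0 - 5 \cdot 6) - 2\,(0 \cdot 0 - 5 \cdot 5) + 3\,(0 \cdot 6 - 1 \cdot 5) = -30 + 50 - 15 = 5 \\[4pt]
M_1^{-1} &= \frac{1}{5}
\begin{pmatrix} -30 & 18 & 7 \\ 25 & -15 & -5 \\ -5 & 4 & 1 \end{pmatrix}
=
\begin{pmatrix} -6 & 3.6 & 1.4 \\ 5 & -3 & -1 \\ -1 & 0.8 & 0.2 \end{pmatrix} \\[4pt]
M_1 M_1^{-1} &=
\begin{pmatrix} 1 & 2 & 3 \\ 0 & 1 & 5 \\ 5 & 6 & 0 \end{pmatrix}
\begin{pmatrix} -6 & 3.6 & 1.4 \\ 5 & -3 & -1 \\ -1 & 0.8 & 0.2 \end{pmatrix}
=
\begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix}
\end{aligned}
$$

For example, the top-left entry of the product is $1 \cdot (-6) + 2 \cdot 5 + 3 \cdot (-1) = 1$.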

These lines of code:

```
M2 := matrix( { { 10, 20, 30 }, { 40, 50, 60 } } )

? "Another matrix multiplication", M2, "X", M1, "=", M3 := M2 * M1
? "Check by dividing matrices", M3, "/", M1, "=", M4 := M3 / M1
? "Is result correct?", M4, "==", M2, "=", M4 == M2
```

produce this result:

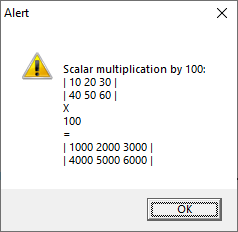
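The same kind of hand check applies here. The product is plain arithmetic; the reading of `/` as multiplication on the right by the inverse is an assumption, chosen because it is the interpretation under which the sample's check `M4 == M2` holds.

$$
\begin{aligned}
M_3 = M_2 M_1 &=
\begin{pmatrix} 10 & 20 & 30 \\ 40 & 50 & 60 \end{pmatrix}
\begin{pmatrix} 1 & 2 & 3 \\ 0 & 1 & 5 \\ 5 & 6 & 0 \end{pmatrix}
=
\begin{pmatrix} 160 & 220 & 130 \\ 340 & 490 & 370 \end{pmatrix} \\[4pt]
M_4 = M_3 M_1^{-1} &= M_2 M_1 M_1^{-1} = M_2
\end{aligned}
$$

For example, the top-left entry of $M_3$ is $10 \cdot 1 + 20 \cdot 0 + 30 \cdot 5 = 160$.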

This last line of code:

```
? "Scalar multiplication by 100:", M2, "X", 100, "=", M2 * 100
```

produces this result:
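By ordinary scalar multiplication, every element of M2 (at this point the 2 x 3 matrix assigned earlier in the sample) is multiplied by 100, so the expected value, worked by hand rather than taken from the program, is:

$$
100 \cdot M_2 = 100 \cdot
\begin{pmatrix} 10 & 20 & 30 \\ 40 & 50 & 60 \end{pmatrix}
=
\begin{pmatrix} 1000 & 2000 & 3000 \\ 4000 & 5000 & 6000 \end{pmatrix}
$$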

Detailed documentation of the FW_Matrix class follows. All computations in this class are performed in Harbour code. This is fast enough for most uses, but more demanding applications may need complex matrix calculations performed in large loops. To support these, highly optimized C-level functions are also provided.