https://deepai.org/machine-learning-glossary-and-terms/softmax-layer

https://github.com/ujhuyz0110/notes/blob/master/softmax_gradient.pdf

- Code: Select all Expand view

- function Main()

local aValues := { 8, 5, 1 }

local aResults := softMax( aValues )

local nVal

for each nVal in aResults

? nVal

next

return nil

function softMax( aValues )

local nSum := 0, nVal, aResults := Array( Len( aValues ) )

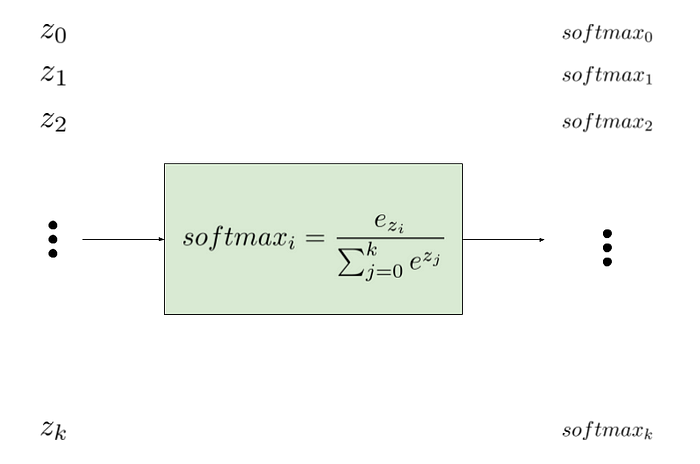

for each nVal in aValues

nSum += exp( nVal )

next

for each nVal in aValues

aResults[ nVal:__enumIndex ] = exp( nVal ) / nSum

next

return aResults
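For reference, here is the formula that softMax() evaluates, followed by a hand-worked check for the sample input { 8, 5, 1 } used in Main(). The symbols x, n, i and j are my own notation, not taken from the post, and the figures are rounded, so treat this as a sanity check rather than the exact console output.

```latex
\operatorname{softmax}(x)_i = \frac{e^{x_i}}{\sum_{j=1}^{n} e^{x_j}}, \qquad i = 1, \dots, n

% Worked example for x = (8, 5, 1), values rounded:
e^{8} \approx 2980.958, \quad e^{5} \approx 148.413, \quad e^{1} \approx 2.718,
\quad \sum_{j} e^{x_j} \approx 3132.089

\operatorname{softmax}(x) \approx (0.9517,\ 0.0474,\ 0.0009)
```

The three results add up to 1, as expected for a softmax output, and the largest input takes almost all of the weight.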