Michel,

Good to hear that this also works in practice.

I didn't even know that such large fields could be used.

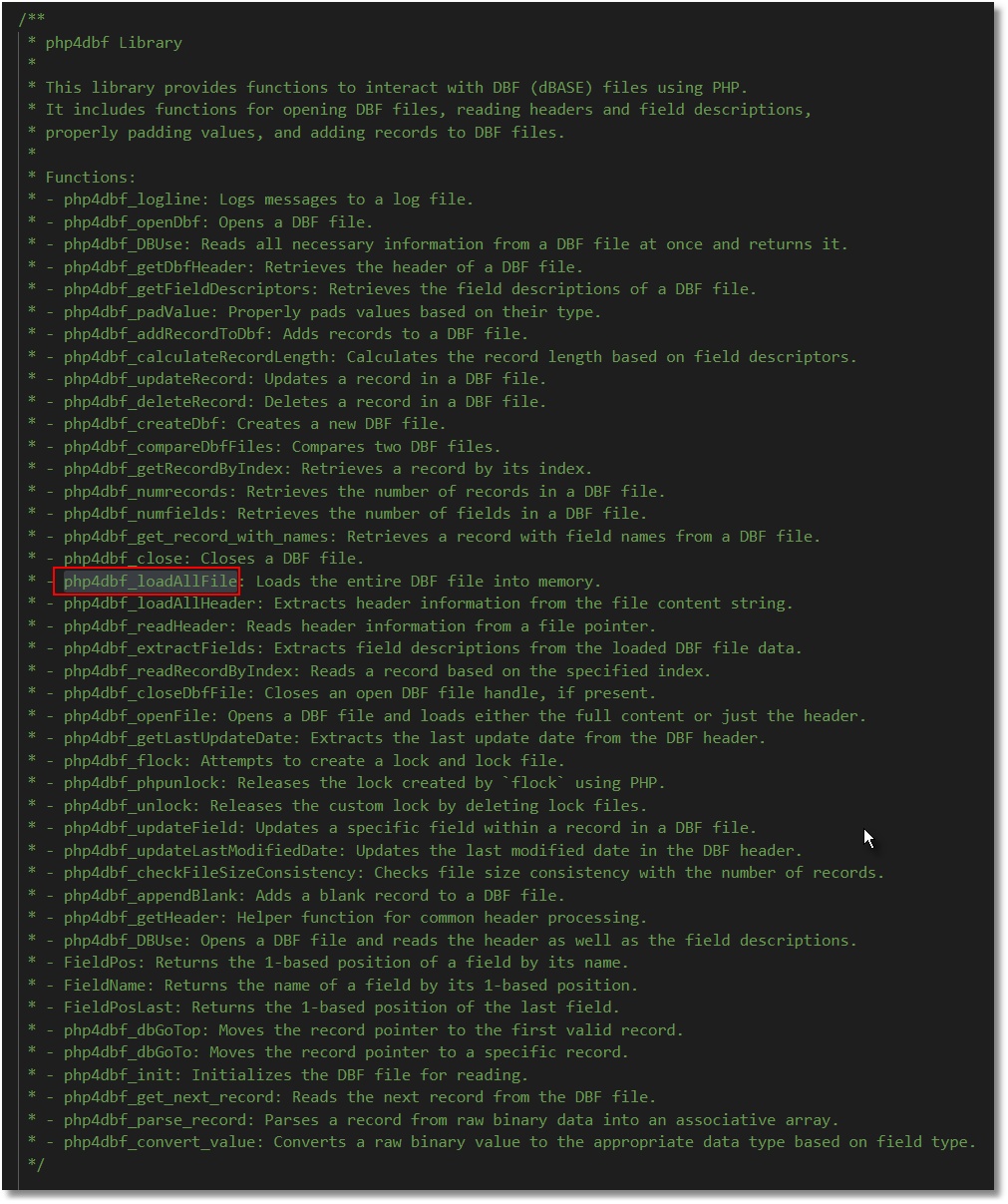

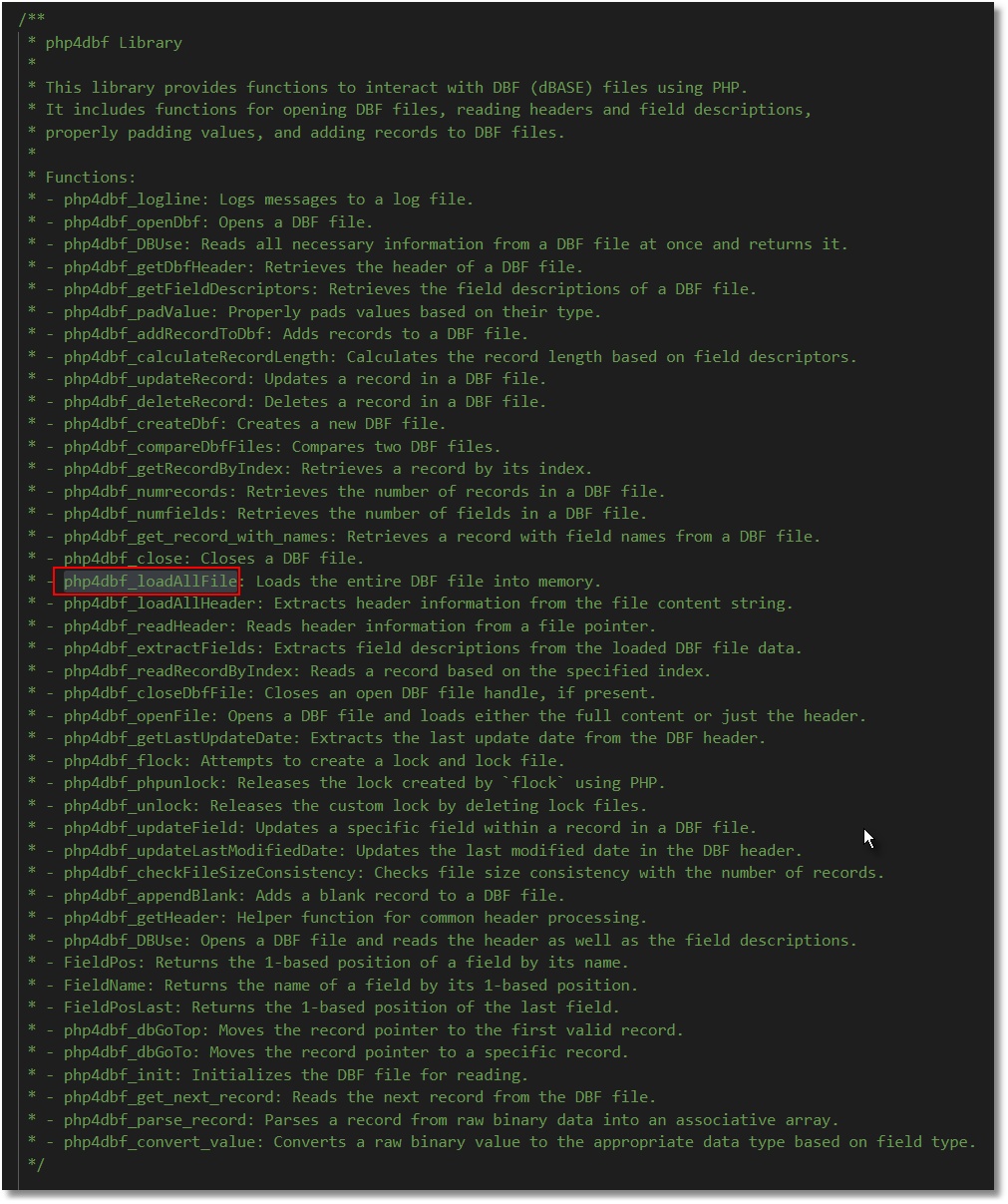

But now that I'm working more closely with the DBF format through the low-level access in php4dbf, there really seems to be no limit here.

Thank you for sharing this idea. I bypass the FTP issue with external files.

Have you never had problems with index files?

In the future, I also want to avoid using index files and instead create ad-hoc hashes or arrays with fields like index number and record number, and then only read those data from the DBF.
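A minimal sketch of that idea, written in Python only for illustration (it does not use php4dbf, and the function names `read_layout`, `build_index` and `read_record` are hypothetical). It assumes a plain dBASE III style file: a 32-byte header, 32-byte field descriptors terminated by `0x0D`, fixed-width records whose first byte is the deletion flag, and cp1252 text. One pass reads only the key field and builds a hash of key value to record numbers; afterwards only the wanted records and fields are read from the DBF.

```python
import struct

def read_layout(f):
    """Parse the DBF header: record count, header size, record size, field layout."""
    f.seek(0)
    header = f.read(32)
    n_records, header_len, record_len = struct.unpack("<IHH", header[4:12])
    fields = {}   # field name -> (offset inside the record, length)
    offset = 1    # byte 0 of every record is the deletion flag
    while True:
        desc = f.read(32)
        if len(desc) < 32 or desc[0] == 0x0D:   # 0x0D ends the descriptor list
            break
        name = desc[:11].split(b"\x00")[0].decode("ascii")
        length = desc[16]
        fields[name] = (offset, length)
        offset += length
    return n_records, header_len, record_len, fields

def build_index(path, key_field, encoding="cp1252"):
    """Ad-hoc index: key value -> list of 1-based record numbers."""
    index = {}
    with open(path, "rb") as f:
        n_records, header_len, record_len, fields = read_layout(f)
        key_off, key_len = fields[key_field]
        for recno in range(1, n_records + 1):
            start = header_len + (recno - 1) * record_len
            f.seek(start)
            if f.read(1) == b"*":        # skip records marked as deleted
                continue
            f.seek(start + key_off)      # jump straight to the key field
            key = f.read(key_len).decode(encoding).strip()
            index.setdefault(key, []).append(recno)
    return index

def read_record(path, recno, wanted, encoding="cp1252"):
    """Read only the named fields of one record."""
    result = {}
    with open(path, "rb") as f:
        _, header_len, record_len, fields = read_layout(f)
        start = header_len + (recno - 1) * record_len
        for name in wanted:
            off, length = fields[name]
            f.seek(start + off)
            result[name] = f.read(length).decode(encoding).strip()
    return result
```

With such an index, a lookup is a dictionary access followed by one seek per hit, so the large fields of non-matching records are never read.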

As I mentioned, I usually work with small databases. That works well and, I believe, quickly too.

I think that if you use fields as large as yours, you have to read from disk record by record and take only the fields you currently need, to keep the payload as small as possible.

However, I have noticed almost no speed difference when I read the entire DBF and create the filter (which is practically an index) in memory, compared to reading record by record and applying the filter immediately.

Best regards,

Otto